| 일 | 월 | 화 | 수 | 목 | 금 | 토 |

|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | 6 | 7 |

| 8 | 9 | 10 | 11 | 12 | 13 | 14 |

| 15 | 16 | 17 | 18 | 19 | 20 | 21 |

| 22 | 23 | 24 | 25 | 26 | 27 | 28 |

| 29 | 30 |

- 데이터

- featureimportance

- 텍스트마이닝

- agglomerative clustering

- RapidMiner

- nrcemotionlexicon

- pythonlearner

- datacrawling

- LDA

- 래피드마이너

- 인과분석

- 티스토리챌린지

- 머신러닝

- 커스텀오퍼레이터

- 토픽모델링

- customeoperator

- 데이터분석

- customoperator

- 올라마

- 통계개념

- causalanalysis

- 채용공고분석

- 데이터크롤링

- sentimentanalysis

- 파이썬러너

- 오블완

- GoEmotions

- 감성분석

- llma

- htmltags

- Today

- Total

마이와 텍스트마이닝

'HelloTalk' 앱 리뷰 토픽모델링 | Topic Modeling LDA [Part 1] 본문

오늘은 제 논문의 연구에 대해서 말씀드리겠습니다. HelloTalk 언어 교환 앱의 리뷰를 분석했습니다. 연구 절차는 다음과 같아요. 이 글에 이 스텝들을 설명하려고 하겠습니다~ 이 글에 데이터 수집, 전처리 그리고 토픽모델링 부분에 대해서 만 이야기 하겠습니다.

1. 데이터 수집 [Data Collection]

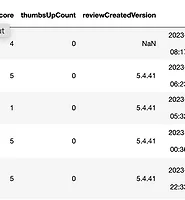

데이터를 google-play-scraper 패키지를 사용하여 Google Play Store의 HelloTalk 앱 리뷰를 크롤링하여 수집했습니다.

df = pd.read_csv('hellotalk.csv')

2. 데이터 전처리 [Data Pre-processing]

- 데이터 정리 및 전처리:

- 불용어, 테그 이모지 제거

def remove_emojis(text):

return emoji.replace_emoji(text, replace='')

def remove_tags(text):

text = re.sub(r'(@\S+) | (#\S+)', r'', text)

return text

def remove_stopwords(text):

# removing the stop words

text_data_without_stopwords = " ".join([word for word in text.split() if word.lower() not in stop_words])

return text_data_without_stopwords

df['content'] = df['content'].astype(str)

df['content_cleaned'] = df['content'].apply(remove_stopwords)

df['content_cleaned'] = df['content_cleaned'].apply(remove_emojis)

df['content_cleaned'] = df['content_cleaned'].apply(remove_tags)

- 구두점, 특수 문자, 숫자 등 제거

df['content_cleaned'] = df['content_cleaned'].apply(lambda x: clean(x,

fix_unicode=True,

to_ascii=True,

lower=True,

no_urls=True,

no_emails=True,

no_phone_numbers=True,

no_numbers=True,

no_digits=True,

no_currency_symbols=True,

no_punct=True,

lang="en"))

df['content_cleaned'] = df['content_cleaned'].str.replace(r'<number>', '', regex=True)- 결측값 처리

df = df[df['content_cleaned'] != '']

df.reset_index(drop=True, inplace=True)

nan_check = df['content_cleaned'].isna().sum()

print(f"Number of NaN values in 'content_cleaned': {nan_check}")

3. 토픽모델링 LDA [Topic Modeling LDA]

데이터 전처리를 완료한 후, 이제 LDA(Latent Dirichlet Allocation)를 활용하여 토픽 모델링을 수행했습니다. 이 과정을 통해 각 리뷰가 속하는 토픽과 그 토픽에 대한 가중치 값을 계산하는 것이 주요 목표입니다.

- 토픽모델링 LDA:

- 표제어 추출

- 토큰화

# Converting contents to list

data = df.content_cleaned.values.tolist()

def sentences_to_words(sentences):

for sentence in sentences:

sentence = gensim.utils.simple_preprocess(str(sentence), deacc=True)

yield(sentence)

import gensim

data_words = list(sentences_to_words(data))

print(data_words[:2])

import spacy

from gensim.utils import simple_preprocess

from collections import defaultdict

def lemmatize_words(texts, stop_words, allowed_postags=['NOUN','VERB','ADJ','ADV']):

"""Lemmatization of the given texts"""

texts = [[word for word in simple_preprocess(str(doc),min_len=3) if word not in stop_words] for doc in texts]

texts_out = []

nlp = spacy.load("en_core_web_sm")

for sent in texts:

doc = nlp(" ".join(sent))

texts_out.append([token.lemma_ for token in doc])

return texts_out

data_lemmatized = lemmatize_words(data_words, stop_words)

data_lemmatized

빈 리스트의 인덱스를 찾아서 제거하기:

empty_lists_count = sum(1 for lst in data_lemmatized if not lst)

print(f"Number of empty lists: {empty_lists_count}")

# Finding empty list indices

empty_list_indices = [index for index, lst in enumerate(data_lemmatized) if not lst]

print(empty_list_indices)

# Delete empty list indices from the data list

data_lemmatized = [item for i, item in enumerate(data_lemmatized) if i not in empty_list_indices]

df.drop(empty_list_indices, inplace=True)

df.reset_index(drop=True, inplace=True)

이제 각 데이터에 대해 표제어 추출과 토큰화가 완료된 리스트가 있습니다. 이 리스트의 이름은 'data_lemmatized' 입니다.

Bigram 만들기

import gensim

content_bigram=gensim.models.Phrases(data_lemmatized)

content_trigram=gensim.models.Phrases(content_bigram[data_lemmatized])

content_bigram_model=gensim.models.phrases.Phraser(content_bigram)

content_trigram_model=gensim.models.phrases.Phraser(content_trigram)

from gensim import corpora

content_bigram_document=[content_bigram_model[nouns] for nouns in data_lemmatized]

content_bigram_document[:10]

bigram_list=[]

for i in range(len(content_bigram_document)):

for j in range(len(content_bigram_document[i])):

if "_" in content_bigram_document[i][j]:

bigram_list.append(content_bigram_document[i][j])

# Bigram List

list(set(bigram_list))

# Creating Dictionary

dictionary = gensim.corpora.Dictionary(content_bigram_document)

count = 0

for k, v in dictionary.iteritems():

print(k, v)

count += 1

if count > 10:

break

# Bag of Words

bow_corpus = [dictionary.doc2bow(doc) for doc in content_bigram_document]

bow_corpus[2]

# TF-IDF

from gensim import corpora, models

tfidf = models.TfidfModel(bow_corpus)

corpus_tfidf = tfidf[bow_corpus]

from pprint import pprint

for doc in corpus_tfidf:

pprint(doc)

break

from gensim.models import CoherenceModel

def compute_performance(corpus, dictionary, texts, limit, start=2, step=1):

coherence_values = []

perplexity_values = []

model_list = []

for num_topics in range(start, limit, step):

lda_model = gensim.models.ldamodel.LdaModel(corpus=corpus,

id2word=dictionary,

num_topics=num_topics,

random_state=12,

iterations=100,

per_word_topics=True)

model_list.append(lda_model)

coherence_model = CoherenceModel(model=lda_model, texts=texts, dictionary=dictionary, coherence='c_v')

coherence_values.append(coherence_model.get_coherence())

perplexity_values.append(lda_model.log_perplexity(corpus))

return model_list, coherence_values, perplexity_values

Coherence 그래프에서 가장 높은 점수는 0.5392로 나타났습니다. 이를 기준으로 토픽 수를 5개로 결정하였습니다.

LDA 모델을 토픽 5개로 설정하기:

# LDA Model

topics_df_tfidf = pd.DataFrame()

lda_model_tfidf =gensim.models.ldamodel.LdaModel(corpus=corpus_tfidf,

id2word=dictionary,

num_topics=5,

random_state=12,

iterations=100,

per_word_topics=True)

for idx, topic in lda_model_tfidf.print_topics(num_words=30):

topic = topic.split("+")

topic = [t.strip().replace('"', '').split("*") for t in topic]

topic_words = [word.strip() for _, word in topic]

topics_df_tfidf[f"Topic {idx}"] = topic_words

from pprint import pprint

pprint(lda_model_tfidf.print_topics(num_words = 50))

# OUTPUT

[(0,

'0.034*"excellent" + 0.029*"cool" + 0.028*"easy_use" + 0.021*"fun" + '

'0.006*"concept" + 0.005*"awsome" + 0.005*"helping" + 0.005*"interface" + '

'0.004*"teacher" + 0.004*"great_meeting" + 0.004*"simple" + '

'0.003*"waste_time" + 0.003*"excelente" + 0.003*"laggy" + 0.003*"muy_buena" '

'+ 0.003*"absolutely_love" + 0.003*"server" + 0.003*"else" + '

'0.003*"super_helpful" + 0.002*"haha" + 0.002*"major" + 0.002*"local" + '

'0.002*"excelent" + 0.002*"awhile" + 0.002*"bar" + 0.002*"character" + '

'0.002*"problem_solve" + 0.002*"useless" + 0.002*"need_improvement" + '

'0.002*"generally" + 0.002*"johayo" + 0.002*"card" + 0.002*"saya" + '

'0.002*"sugoi" + 0.002*"line" + 0.002*"overseas" + 0.002*"awesone" + '

'0.002*"attempt" + 0.002*"brilliant_idea" + 0.002*"recommend_everyone" + '

'0.002*"converse" + 0.002*"unique" + 0.002*"hao" + 0.002*"complete" + '

'0.002*"none" + 0.002*"year_old" + 0.002*"prilozhenie" + 0.002*"exceptional" '

'+ 0.001*"mode" + 0.001*"fabulous"'),

(1,

'0.089*"good" + 0.070*"great" + 0.050*"love" + 0.030*"learn" + '

'0.029*"language" + 0.018*"really" + 0.015*"learning" + 0.013*"people" + '

'0.012*"way" + 0.012*"help" + 0.012*"new" + 0.011*"application" + '

'0.010*"thank" + 0.010*"meet" + 0.010*"useful" + 0.010*"practice" + '

'0.010*"lot" + 0.009*"friend" + 0.009*"make" + 0.008*"far" + '

'0.008*"new_friend" + 0.007*"world" + 0.007*"native_speaker" + '

'0.006*"language_exchange" + 0.006*"improve" + 0.006*"helpful" + '

'0.006*"many" + 0.006*"meet_new" + 0.006*"much" + 0.006*"well" + '

'0.005*"make_friend" + 0.005*"english" + 0.005*"one" + 0.005*"talk" + '

'0.005*"speak" + 0.005*"find" + 0.004*"japanese" + 0.004*"spanish" + '

'0.004*"connect" + 0.004*"idea" + 0.004*"interesting" + 0.004*"learner" + '

'0.004*"different" + 0.004*"like" + 0.003*"culture" + 0.003*"want" + '

'0.003*"skill" + 0.003*"people_around" + 0.003*"also" + 0.003*"use"'),

(2,

'0.066*"amazing" + 0.013*"work" + 0.010*"super" + 0.006*"superb" + '

'0.005*"brilliant" + 0.005*"definitely" + 0.005*"french" + 0.005*"lovely" + '

'0.004*"fix_bug" + 0.004*"edit" + 0.004*"okay" + 0.004*"keep_crash" + '

'0.004*"nic" + 0.003*"correction" + 0.003*"appear" + 0.003*"together" + '

'0.003*"mandarin" + 0.003*"recent_update" + 0.003*"registration" + '

'0.003*"receive_notification" + 0.003*"turn" + 0.003*"face" + 0.003*"site" + '

'0.003*"work_fine" + 0.003*"greet" + 0.003*"teaching" + '

'0.003*"extremely_helpful" + 0.002*"ever_since" + 0.002*"restart_phone" + '

'0.002*"battery" + 0.002*"quite" + 0.002*"functionality" + 0.002*"suggest" + '

'0.002*"design" + 0.002*"buggy" + 0.002*"everyday" + 0.002*"create" + '

'0.002*"use" + 0.002*"well" + 0.002*"rather" + 0.002*"ever_see" + '

'0.002*"decide" + 0.002*"besides" + 0.002*"black" + 0.002*"encounter" + '

'0.002*"date" + 0.002*"thank" + 0.002*"hindi" + 0.002*"gain" + '

'0.002*"boost"'),

(3,

'0.040*"nice" + 0.018*"helpful" + 0.017*"like" + 0.015*"language" + '

'0.014*"people" + 0.014*"learn" + 0.009*"really" + 0.009*"perfect" + '

'0.007*"easy" + 0.007*"tool" + 0.007*"wonderful" + 0.007*"great" + '

'0.007*"talk" + 0.006*"fantastic" + 0.006*"lot" + 0.006*"ever" + 0.006*"use" '

'+ 0.006*"help" + 0.005*"meet" + 0.005*"practice" + 0.005*"want" + '

'0.005*"much" + 0.005*"make" + 0.005*"many" + 0.005*"find" + 0.005*"well" + '

'0.004*"friendly" + 0.004*"exchange" + 0.004*"get" + 0.004*"bad" + '

'0.004*"way" + 0.004*"good" + 0.004*"love" + 0.004*"wow" + 0.004*"one" + '

'0.004*"improve" + 0.004*"fun" + 0.004*"feature" + 0.004*"highly_recommend" '

'+ 0.004*"world" + 0.003*"speak" + 0.003*"friend" + 0.003*"enjoy" + '

'0.003*"new" + 0.003*"connect" + 0.003*"partner" + 0.003*"native" + '

'0.003*"chat" + 0.003*"also" + 0.003*"foreign"'),

(4,

'0.022*"awesome" + 0.009*"message" + 0.009*"useful" + 0.008*"use" + '

'0.008*"get" + 0.007*"update" + 0.007*"problem" + 0.007*"work" + 0.007*"log" '

'+ 0.006*"even" + 0.006*"one" + 0.006*"well" + 0.006*"try" + 0.006*"fix" + '

'0.005*"please" + 0.005*"see" + 0.005*"please_fix" + 0.005*"time" + '

'0.005*"keep" + 0.005*"open" + 0.005*"say" + 0.004*"like" + 0.004*"language" '

'+ 0.004*"new" + 0.004*"would" + 0.004*"make" + 0.004*"want" + 0.004*"chat" '

'+ 0.004*"account" + 0.004*"register" + 0.004*"send" + 0.004*"people" + '

'0.004*"help" + 0.004*"notification" + 0.004*"really" + 0.004*"could" + '

'0.004*"need" + 0.004*"change" + 0.004*"ca" + 0.004*"issue" + 0.004*"thank" '

'+ 0.003*"download" + 0.003*"show" + 0.003*"still" + 0.003*"hellotalk" + '

'0.003*"learn" + 0.003*"know" + 0.003*"let" + 0.003*"phone" + '

'0.003*"sometimes"')]

topics_df_ = pd.DataFrame()

for idx, topic in lda_model_tfidf.print_topics(num_words = 50):

topic = topic.split('+')

topic = [t.strip().replace('"', '').split('*') for t in topic]

topic_words = [word.strip() for _, word in topic]

topics_df_[f"Topic {idx + 1}"] = topic_words

topics_df_

pyLDAvis를 사용하여 LDA 모델의 시각화를 준비하고 출력하는 과정입니다. pyLDAvis는 LDA 모델의 토픽들을 보다 직관적이고 이해하기 쉬운 형태로 시각화할 수 있는 도구입니다. 생각보다 괜찮게 나왔습니다~

import pyLDAvis

import pyLDAvis.gensim_models as gensimvis

# LDA Visualization

pyLDAvis.enable_notebook()

lda_display = gensimvis.prepare(lda_model_tfidf, corpus_tfidf, dictionary)

pyLDAvis.display(lda_display)

Wordclouds

# 1. Wordcloud of Top N words in each topic

from matplotlib import pyplot as plt

from wordcloud import WordCloud

import matplotlib.colors as mcolors

cloud = WordCloud(

background_color='white',

width=2500,

height=1800,

max_words=50,

colormap= 'plasma',

prefer_horizontal=1.0)

topics = lda_model_tfidf.show_topics(formatted=False, num_words=50)

fig, axes = plt.subplots(3,2, figsize=(10,10), sharex=True, sharey=True)

for i, ax in enumerate(axes.flatten()):

fig.add_subplot(ax)

topic_words = dict(topics[i][1])

cloud.generate_from_frequencies(topic_words, max_font_size=1000)

plt.gca().imshow(cloud)

plt.gca().set_title('Topic ' + str(i), fontdict=dict(size=16))

plt.gca().axis('off')

plt.subplots_adjust(wspace=0, hspace=0)

plt.axis('off')

plt.margins(x=0, y=0)

plt.tight_layout()

plt.show()

마지막으로 각 데이터의 토픽 가중치를 계산하고, 프로젝트의 다음 단계에서 사용할 데이터프레임을 생성했습니다.

content_values = list(lda_model_tfidf[corpus_tfidf])

rows = []

for doc_idx, (topic_weights, _, _) in enumerate(content_values):

max_weight = max(topic_weights, key=lambda x: x[1])[1]

dominant_topics = [idx for idx, weight in topic_weights if weight == max_weight]

if len(dominant_topics) > 1 and max_weight == min(max_weight for idx, max_weight in topic_weights):

# Birden fazla eşit ağırlığa sahip topic varsa "multiple topics" olarak işaretle

dominant_topic_index = "multiple topics"

else:

dominant_topic_index = max(dominant_topics)

row = {

**{f"Topic_{idx}": weight for idx, weight in topic_weights},

"Dominant_Topic": dominant_topic_index

}

rows.append(row)

df_topics = pd.DataFrame(rows)

df_topics.index = df_topics.index

df_topics = df_topics[::-1]

df_topics.reset_index(drop=True, inplace=True)

df_topics.head(-10)

'텍스트마이닝' 카테고리의 다른 글

| 쿠팡 앱 리뷰 토픽모델링 분석 (23) | 2024.09.21 |

|---|---|

| 4차 산업혁명 동향: 채용 공고 분석 (11) | 2024.09.20 |

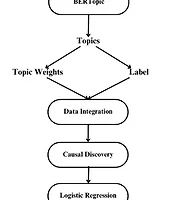

| BERTopic과 인과분석: 정신 건강 문제에 영향을 미치는 요인 탐색 (3) | 2024.08.26 |

| 'HelloTalk' 앱 리뷰 감성분석 | Sentiment Analysis using NRC Emotion Lexicon and GoEmotions Dataset [Part 2] (6) | 2024.08.07 |

| 감성분석 Sentiment Analysis - [Amazon Sales Data] (8) | 2024.05.09 |